Phase 1: Code Quality & Linting

Overview & Objectives

High code quality prevents bugs early in the development cycle. In this phase, you’ll configure automated linting workflows to enforce style rules, catch syntax errors, and generate shareable reports. By the end, you will:

- Understand GitHub Actions workflow structure for code quality

- Configure ESLint for JavaScript/TypeScript and Flake8/Black for Python

- Cache dependencies to speed up CI runs

- Upload lint results as artifacts for team visibility

- Verify and exercise your workflows end-to-end

Prerequisites

- A GitHub repository containing your application code

- JavaScript/TypeScript projects should have eslint configured (npm install eslint --save-dev)

- Python projects should have flake8, black, and mypy configured (pip install flake8 black mypy)

- Basic understanding of GitHub Actions and YAML

Step 1: Create the Lint Workflow File

Create a file .github/workflows/lint.yml in your repo root. This defines triggers and jobs for linting.

    name: Code Linting
    on:
      push:
        branches: [ main, develop ]
      pull_request:
        branches: [ main ]

Step 2: Define the Lint Job

Under jobs:, define a lint job running on Ubuntu.

    jobs:
      lint:
        name: Lint Code
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v4

Step 3: Configure JavaScript/TypeScript Linting

Add steps to set up Node.js, install dependencies, run ESLint, and upload the JSON report.

    - name: Setup Node.js
      uses: actions/setup-node@v4
      with:
        node-version: '20'
        cache: npm
    - name: Install dependencies
      run: npm ci
    - name: Run ESLint
      run: |
        npm run lint -- --format=json --output-file=eslint-report.json
        npm run lint
      continue-on-error: true
    - name: Upload ESLint report
      uses: actions/upload-artifact@v4
      if: always()
      with:
        name: eslint-report
        path: eslint-report.json

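Note: continue-on-error: true lets the job proceed past a failing lint step, and if: always() makes sure the report is uploaded either way. Because of continue-on-error, lint failures will not fail the job on their own.

Step 4: Configure Python Linting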

For Python projects, add a separate step block to run Flake8, Black, and MyPy.

    - name: Setup Python
      uses: actions/setup-python@v4
      with:
        python-version: '3.11'
    - name: Install Python tools
      run: pip install flake8 black mypy
    - name: Run Flake8
      run: flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics
    - name: Run Black
      run: black --check --diff .
    - name: Run MyPy
      run: mypy . --ignore-missing-imports

Step 5: Verify Caching

Ensure dependency installation is cached across runs. The cache: npm option in setup-node reuses the ~/.npm directory.

Step 6: Full Workflow Example

    name: Code Linting
    on: [push, pull_request]
    jobs:
      lint:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - uses: actions/setup-node@v4
            with:
              node-version: '20'
              cache: npm
          - run: npm ci
          - run: |
              npm run lint -- --format=json --output-file=eslint-report.json
              npm run lint
            continue-on-error: true
          - uses: actions/upload-artifact@v4
            if: always()
            with:
              name: eslint-report
              path: eslint-report.json
          - uses: actions/setup-python@v4
            with:
              python-version: '3.11'
          - run: pip install flake8 black mypy
          - run: flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics
          - run: black --check --diff .
          - run: mypy . --ignore-missing-imports

Verify Your Work

- 🔍 Create a branch feature/lint-test, introduce a lint error, and open a PR.

- ✅ Confirm the “Lint Code” job runs and the ESLint report artifact appears.

- 📂 Download eslint-report.json and inspect the errors.

Exercises

- Create a separate workflow python-lint.yml that only runs Python linters and uploads a flake8-report.txt artifact.

- Modify the ESLint configuration to enforce a maximum line length of 100 and verify failures for lines longer than 100 characters.

***

Phase 2: Comprehensive Testing Framework

Overview & Objectives

Thorough testing uncovers defects before production. In this phase, you’ll set up a GitHub Actions workflow that runs unit, integration, and end-to-end tests in parallel using a test matrix. You will:

- Configure a test matrix for three test types

- Spin up dependent services—PostgreSQL for integration tests

- Upload coverage reports only for unit tests

- Optimize concurrency with fail-fast and max-parallel

- Verify results and extend with a “smoke” test

Prerequisites

- Node.js project with scripts: npm run test:unit, npm run test:integration, npm run test:e2e

- Python and pytest for Python projects (if applicable)

- Codecov or similar coverage uploader configured

- Basic Docker familiarity for services

Step 1: Create Test Workflow

Create .github/workflows/test.yml:

    name: Test Pipeline
    on:
      push:
        branches: [ main, develop ]
      pull_request:
        branches: [ main ]

Step 2: Define Test Job & Matrix

Under jobs:, define test with matrix strategy:

    jobs:
      test:
        name: Run Tests
        runs-on: ubuntu-latest
        strategy:
          fail-fast: false
          max-parallel: 3
          matrix:
            test-type: [unit, integration, e2e]

Step 3: Add PostgreSQL Service

Integration tests often require a database. Use services: to start PostgreSQL:

    services:
      postgres:
        image: postgres:15
        env:
          POSTGRES_USER: postgres
          POSTGRES_PASSWORD: postgres
          POSTGRES_DB: testdb
        # Map the port so tests running directly on the runner can reach localhost:5432
        ports:
          - 5432:5432
        options: >-
          --health-cmd pg_isready
          --health-interval 10s
          --health-timeout 5s
          --health-retries 5

Step 4: Checkout & Install

    steps:
      - name: Checkout code
        uses: actions/checkout@v4
      - name: Setup Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'
          cache: npm
      - name: Install dependencies
        run: npm ci

Step 5: Run Tests by Type

Use a bash case to execute each test type:

    - name: Run ${{ matrix.test-type }} tests
      run: |
        case ${{ matrix.test-type }} in
          unit)
            npm run test:unit -- --coverage --ci
            ;;
          integration)
            npm run test:integration -- --ci --testTimeout=30000
            ;;
          e2e)
            npm run test:e2e -- --headless
            ;;
        esac
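The case statement above has no default branch, so a misspelled matrix value would run nothing and the step would still pass. A minimal sketch of the same dispatch as a shell function with a failing default follows; the function name test_command is hypothetical, and the commands are the ones used in the step.

```shell
# test_command <type>: print the npm command for a test type (hypothetical helper).
test_command() {
  case "$1" in
    unit)        echo "npm run test:unit -- --coverage --ci" ;;
    integration) echo "npm run test:integration -- --ci --testTimeout=30000" ;;
    e2e)         echo "npm run test:e2e -- --headless" ;;
    *)
      # Unknown types fail loudly instead of passing silently.
      echo "Unknown test type: $1" >&2
      return 1
      ;;
  esac
}
```

In a workflow step, the same idea amounts to adding a `*)` branch that prints an error and exits with a non-zero status.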

Step 6: Upload Coverage for Unit Tests

Only unit tests generate coverage:

    - name: Upload coverage report
      uses: codecov/codecov-action@v4
      if: matrix.test-type == 'unit'
      with:
        file: ./coverage/lcov.info
        flags: ${{ matrix.test-type }}
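Because the upload depends on coverage/lcov.info existing, a guard step before it can make a missing report obvious. The sketch below is one way to do that, assuming a hypothetical helper named require_report; the `::error::` prefix is the GitHub Actions workflow command for error annotations.

```shell
# require_report <path>: succeed only if the file exists and is not empty.
require_report() {
  if [ -s "$1" ]; then
    echo "Found coverage report: $1"
  else
    # ::error:: is a GitHub Actions workflow command that annotates the run.
    echo "::error::Coverage report not found or empty: $1"
    return 1
  fi
}
```

A step could call `require_report ./coverage/lcov.info` right after the unit tests so the failure points at the tests rather than at the uploader.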

Note: Make sure the unit test run writes coverage/lcov.info. If the file is missing, the upload step has no report to send and may fail.

Step 7: Verify Workflow

- ▶️ Push to a feature branch and open a PR.

- 🔢 Confirm three parallel jobs: unit, integration, e2e.

- 📂 Confirm the unit-test coverage report appears in Codecov.

Exercises

- Add a fourth test type, smoke, running a minimal health check script (npm run test:smoke), and include it in the matrix.

- Modify the workflow to skip end-to-end tests for pull requests from forks for speed and security.

Extended Tips

- Retry Tests: For flaky tests, wrap npm run in a retry loop: for i in {1..3}; do npm run test && break; done.

- Parallelize within a job: Use npm run test:unit -- --maxWorkers=4 to parallelize Jest tests.

- Test Reports: Generate JUnit XML (with Jest this needs a reporter package such as jest-junit: --reporters=default --reporters=jest-junit) and upload it with actions/upload-artifact for CI dashboards.
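The retry tip can be turned into a small reusable function. This is a sketch only: the name retry and its argument order are hypothetical, and it retries immediately without any delay between attempts.

```shell
# retry <max_attempts> <command...>: rerun the command until it succeeds
# or the attempt budget is used up (hypothetical helper).
retry() {
  local max_attempts="$1"
  shift
  local attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "Command failed after $attempt attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    echo "Retrying (attempt $attempt of $max_attempts)..." >&2
  done
}
```

Usage in a step would look like `retry 3 npm run test:unit`. Unlike the one-line loop, the function returns a non-zero status explicitly when every attempt fails.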

Phase 3: Build Optimization & Artifact Management

Why It Matters

Efficient builds accelerate delivery, reduce CI time and cost, and enable reliable downstream jobs. Multi-stage Docker builds produce minimal runtime images, BuildKit caching slashes rebuild times, and artifact uploads decouple build and deploy stages.

What You’ll Learn

- Multi-stage Dockerfile architecture

- Inline BuildKit caching in GitHub Actions

- Cross-platform images with Buildx

- Uploading images and caches as artifacts

Prerequisites

- Project Dockerfile supporting production build

- Familiarity with Docker layers and caching

- GitHub Actions runner with Docker support

1. Sample Multi-Stage Dockerfile

    # Builder stage
    FROM node:18-alpine AS builder
    WORKDIR /app
    COPY package*.json ./
    RUN npm ci
    COPY . .
    RUN npm run build

    # Runtime stage
    FROM node:18-alpine AS runner
    WORKDIR /app
    COPY --from=builder /app/dist ./dist
    COPY package*.json ./
    RUN npm ci --production
    EXPOSE 3000
    CMD ["node","dist/index.js"]

2. GitHub Actions Workflow

    name: Build & Cache
    on:
      push:
        branches: [ main, develop ]
    jobs:
      build:
        runs-on: ubuntu-latest
        strategy:
          fail-fast: false
          matrix:
            # Bare architecture names: a "/" is not allowed in image tags or artifact names
            arch: [amd64, arm64]
        steps:
          - name: Checkout code
            uses: actions/checkout@v4
          # QEMU lets the amd64 runner build the arm64 image
          - name: Set up QEMU
            uses: docker/setup-qemu-action@v3
          - name: Set up Docker Buildx
            uses: docker/setup-buildx-action@v3
          - name: Build with GitHub Actions cache
            uses: docker/build-push-action@v5
            with:
              context: .
              file: Dockerfile
              platforms: linux/${{ matrix.arch }}
              tags: myapp:${{ github.sha }}-${{ matrix.arch }}
              # Load the image into the local Docker daemon so docker save can find it
              load: true
              cache-from: type=gha
              cache-to: type=gha,mode=max
          - name: Save image as artifact
            run: |
              docker save myapp:${{ github.sha }}-${{ matrix.arch }} \
                | gzip > image-${{ matrix.arch }}.tar.gz
          - uses: actions/upload-artifact@v4
            with:
              name: image-${{ matrix.arch }}
              path: image-${{ matrix.arch }}.tar.gz

Note: This workflow uses type=gha caching to eliminate manual cache upload steps and leverage GitHub’s built-in cache storage.

3. Verify the Build

- ✅ Confirm 2 parallel jobs (amd64 & arm64) complete under “Actions.”

- 📂 Download the image-amd64 artifact, unzip it, and run gunzip -c image-amd64.tar.gz | docker load.

- ⚡ Push again to see the caching speedup.
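Full platform strings such as linux/amd64 contain a slash, which Docker tags and artifact names do not accept. A minimal sketch of a helper that turns a platform string into a safe suffix is shown here; the name platform_slug is hypothetical.

```shell
# platform_slug <platform>: replace "/" with "-" so the value is safe
# to use in an image tag or artifact name (hypothetical helper).
platform_slug() {
  echo "$1" | tr '/' '-'
}
```

For example, `platform_slug linux/arm64` prints `linux-arm64`, which could then be written to `$GITHUB_OUTPUT` and reused by later steps.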

Phase 4: Deployment Automation with Blue/Green and Helm

Why Blue/Green Deployments?

Blue/green strategies reduce downtime and risk by running two identical production environments (“blue” and “green”), switching traffic to the new version only after smoke tests pass.

Prerequisites

- Helm charts in charts/myapp

- Kubernetes cluster context via KUBECONFIG or service account

1. Workflow Configuration

    name: Helm Blue/Green Deploy
    on:
      push:
        branches: [ main ]
    jobs:
      deploy:
        runs-on: ubuntu-latest
        environment:
          name: production
          # Resolves only if a step with id "url" sets a "url" output
          url: ${{ steps.url.outputs.url }}
        steps:
          - uses: actions/checkout@v4
          - name: Setup Helm
            uses: azure/setup-helm@v3
            with:
              version: '3.12.0'
          - name: Setup kubectl
            uses: azure/setup-kubectl@v3
            with:
              version: '1.27.0'

2. Determine Active Color

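    - name: Get active color
      id: active
      run: |
        # Test helm's exit code directly; capturing its output would never equal "yes".
        # The output is the color to deploy next, i.e. the currently inactive one.
        if helm status myapp-blue -n prod > /dev/null 2>&1; then
          echo "color=green" >> "$GITHUB_OUTPUT"
        else
          echo "color=blue" >> "$GITHUB_OUTPUT"
        fi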
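The color selection boils down to a toggle between two values. A minimal sketch of that toggle as a function, assuming a hypothetical helper named next_color and assuming that a first deployment (no active release) starts with blue:

```shell
# next_color <active_color>: print the color to deploy next (hypothetical helper).
next_color() {
  case "$1" in
    blue)  echo green ;;
    green) echo blue ;;
    # Assumption: with no active release yet, start with blue.
    *)     echo blue ;;
  esac
}
```

The same function can also name the release to clean up after the switch, since the old color is simply the toggle of the new one.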

3. Deploy Inactive Color

    - name: Deploy ${{ steps.active.outputs.color }}
      run: |
        helm upgrade --install myapp-${{ steps.active.outputs.color }} charts/myapp \
          --namespace prod \
          --set image.tag=${{ github.sha }} \
          --wait --timeout 180s

4. Smoke Test & Swap

    - name: Smoke test
      run: |
        # ingress is a list, so index its first entry
        HOSTNAME=$(kubectl get svc myapp-${{ steps.active.outputs.color }} -n prod \
          -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
        STATUS=$(curl -s -o /dev/null -w "%{http_code}" "http://$HOSTNAME/health")
        if [ "$STATUS" != "200" ]; then exit 1; fi
    - name: Switch service
      run: |
        kubectl patch svc myapp -n prod \
          -p '{"spec":{"selector":{"app":"myapp-${{ steps.active.outputs.color }}"}}}'
    - name: Cleanup old
      run: |
        OLD=$( [ "${{ steps.active.outputs.color }}" = "blue" ] && echo green || echo blue )
        # On the first deploy there is no old release, so do not fail the job
        helm uninstall "myapp-$OLD" -n prod || echo "No previous release myapp-$OLD to remove"

5. Verify Deployment

- 🌐 Visit the myapp service URL to confirm the new version.

- 🔄 Inspect Helm releases: helm list -n prod.

5. Phase 5: Advanced Secrets & Environment Management

What You’ll Learn

- Integrate HashiCorp Vault and AWS Parameter Store

- Use External Secrets Operator for Kubernetes

- Automate secret freshness checks

5.1 HashiCorp Vault Integration

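    - name: Vault Login
      env:
        VAULT_ADDR: ${{ secrets.VAULT_ADDR }}
        VAULT_TOKEN: ${{ secrets.VAULT_TOKEN }}
      run: |
        # With a KV v2 mount, vault kv get adds the data/ segment itself
        vault kv get -field=password secret/myapp/db > db_password.txt
    - name: Use DB Password
      run: |
        DB_PASS=$(cat db_password.txt)
        # Mask the value and never print it; echoing a secret writes it to the build log
        echo "::add-mask::$DB_PASS"
        echo "Connecting with the DB password loaded from Vault"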

5.2 AWS Parameter Store

    - name: Fetch AWS Parameter
      run: |
        aws ssm get-parameter --name "/myapp/db" --with-decryption \
          --query "Parameter.Value" --output text > db_pass.txt
    - name: Use DB Password
      run: |
        DB_PASS=$(cat db_pass.txt)
        # Mask the value and never print it; echoing a secret writes it to the build log
        echo "::add-mask::$DB_PASS"
        echo "DB password loaded from Parameter Store"

5.3 Kubernetes External Secrets

    apiVersion: external-secrets.io/v1beta1
    kind: ExternalSecret
    metadata:
      name: myapp-db-secret
    spec:
      secretStoreRef:
        name: vault-backend
        kind: SecretStore
      target:
        name: myapp-db
      data:
        - secretKey: password
          remoteRef:
            key: secret/data/myapp/db
            property: password

Apply via:

kubectl apply -f external-secret.yaml

5.4 Secret Freshness Check

    - name: Check Secret Expiry
      run: |
        if [[ -n "${{ secrets.API_TOKEN_EXPIRES }}" ]]; then
          expires=$(date -d "${{ secrets.API_TOKEN_EXPIRES }}" +%s)
          now=$(date +%s)
          days=$(( (expires - now) / 86400 ))
          if [ $days -lt 7 ]; then
            echo "Warning: token expires in $days days"
          fi
        fi
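The expiry arithmetic is easier to test when it takes epoch seconds as arguments instead of calling date directly. The sketch below does that under stated assumptions: the function name expiry_status and the default 7-day threshold parameter are hypothetical. It also treats an already expired token separately, because integer division rounds a small negative difference to 0 days.

```shell
# expiry_status <expires_epoch> <now_epoch> [warn_days]
# Prints "expired", "warning: N days left", or "ok: N days left" (hypothetical helper).
expiry_status() {
  local expires="$1" now="$2" warn_days="${3:-7}"
  local days
  if [ "$expires" -le "$now" ]; then
    # Checked first: (expires - now) / 86400 would round to 0 just after expiry.
    echo "expired"
    return 1
  fi
  days=$(( (expires - now) / 86400 ))
  if [ "$days" -lt "$warn_days" ]; then
    echo "warning: $days days left"
  else
    echo "ok: $days days left"
  fi
}
```

In a workflow step, the two epoch values would come from `date -d "<expiry date>" +%s` and `date +%s`, as in the step above.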

***

6. Phase 6: Troubleshooting & Debugging Deep Dive

What You’ll Learn

- Enable verbose runner and step logging

- Use tmate for live debugging

- Analyze real error logs and apply fixes

6.1 Verbose Logging

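Enable debug logging by creating two repository secrets or variables, each set to true: ACTIONS_RUNNER_DEBUG (runner diagnostic logs) and ACTIONS_STEP_DEBUG (step debug logs). Setting them in a workflow's env: block has no effect. You can also select “Enable debug logging” when re-running a job.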

6.2 Interactive tmate Session

    - name: Setup tmate
      if: failure()
      uses: mxschmitt/action-tmate@v3
      with:
        limit-access-to-actor: true
        github-token: ${{ secrets.GITHUB_TOKEN }}

6.3 Sample Error Log Analysis

    npm ERR! code ELIFECYCLE
    npm ERR! errno 1
    npm ERR! myapp@1.0.0 test:unit: `jest --config jest.config.js`
    npm ERR! Exit status 1
    npm ERR!
    npm ERR! Failed at the myapp@1.0.0 test:unit script.

**Fix:** Ensure jest.config.js exists, check for syntax errors in tests, and install missing devDependencies.

***

7. Phase 7: Performance & Cost Analysis

What You’ll Learn

- Select cost-effective runners

- Balance matrix parallelism vs. cost

- Implement artifact retention policies

7.1 Runner Cost Comparison

| Runner Type | Price/min | Avg Build Time | Cost/build |
|---|---|---|---|
| GitHub Ubuntu | $0.008 | 8m | $0.064 |
| Self-hosted ARM | $0.005 | 9m | $0.045 |

7.2 Matrix Concurrency Trade-off

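With max-parallel: 4, 12 jobs run in 3 waves of 4. Reducing max-parallel to 2 doubles the waves to 6 and halves peak concurrency. Total billed minutes stay the same because every job still runs once; the trade-off is longer wall-clock time in exchange for fewer runners in use at once.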
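The wave arithmetic is a ceiling division. A small sketch follows, assuming for simplicity that every job takes the same number of minutes; the function names and the 5-minute figure in the usage note are hypothetical.

```shell
# waves <jobs> <max_parallel>: number of waves, i.e. ceil(jobs / max_parallel).
waves() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# wall_clock_minutes <jobs> <max_parallel> <minutes_per_job>
# Assumes all jobs take equally long, so each wave lasts one job's duration.
wall_clock_minutes() {
  echo $(( $(waves "$1" "$2") * $3 ))
}

# billed_minutes <jobs> <minutes_per_job>: independent of max-parallel.
billed_minutes() {
  echo $(( $1 * $2 ))
}
```

With 12 jobs of 5 minutes each, `wall_clock_minutes 12 4 5` gives 15 and `wall_clock_minutes 12 2 5` gives 30, while `billed_minutes 12 5` stays at 60 in both cases.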

7.3 Artifact Retention

    - uses: actions/upload-artifact@v4
      with:
        name: build-artifacts
        path: dist/
        retention-days: 3 # default 90 days

***

8. Phase 8: Extended Case Studies & Diagrams

8.1 E-Commerce Modernization

Architecture Diagram:

- Monorepo with 50 microservices

- Selective builds via git diff

- Parallel matrix builds

Key Metrics:

| Metric | Before | After |
|---|---|---|
| Avg Build Time | 15m | 5m (↓67%) |
| Failure Rate | 23% | 2.5% (↓89%) |
| Infra Cost | $2.8M/yr | $0.5M/yr (↓82%) |

8.2 Open Source Automation

Workflow Automations:

- Auto-label first-time contributors

- Mandatory CodeQL scans on PRs

Impact:

| Metric | Before | After |
|---|---|---|
| First-time Merge Rate | 12% | 52% (↑333%) |
| Vulnerabilities | 45/mo | 10/mo (↓78%) |

8.3 Financial Compliance Pipeline

Compliance Steps:

- Generate immutable metadata JSON

- Post to internal audit log API

- Verify CloudTrail logs daily

Results:

- 100% audit pass rate over 24 quarters

- Manual effort ↓60%


9. Developing a Custom GitHub Action in JavaScript

What You’ll Learn

- Structure of a JavaScript-based GitHub Action

- Using the GitHub Actions toolkit

- Publishing an action to the GitHub Marketplace

Prerequisites

- Node.js and npm installed locally

- Basic JavaScript knowledge

- Familiarity with Git and GitHub repositories

1. Scaffold the Action

    mkdir -p .github/actions/hello-world
    cd .github/actions/hello-world
    npm init -y
    npm install @actions/core @actions/github

2. Write the Action Code

    // index.js
    const core = require('@actions/core');
    const github = require('@actions/github');

    async function run() {
      try {
        const name = core.getInput('name');
        console.log(`::notice::Hello, ${name}!`);
        core.setOutput('greeting', `Hello, ${name}!`);
      } catch (error) {
        core.setFailed(error.message);
      }
    }

    run();

3. Define Action Metadata

    # action.yml
    name: 'Hello World Action'
    description: 'Greets the user by name'
    inputs:
      name:
        description: 'Name to greet'
        required: true
    outputs:
      greeting:
        description: 'The greeting message'
    runs:
      # node16 is deprecated on GitHub-hosted runners; node20 is the supported runtime
      using: 'node20'
      main: 'index.js'

4. Use Your Action in a Workflow

    jobs:
      demo:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          # The step needs an id so later steps can read its outputs
          - id: hello
            uses: ./.github/actions/hello-world
            with:
              name: 'Perplexity AI'
          - run: echo "Greeting: ${{ steps.hello.outputs.greeting }}"

5. Publish to Marketplace

- Create a public GitHub repo for the action, with action.yml at the repository root.

- Tag a release with a version number.

- Draft a new release from the repository’s “Releases” page and select “Publish this Action to the GitHub Marketplace.”

***

10. Advanced YAML Deep Dive

10.1 Dynamic Matrix Generation

Generate matrix values at runtime via fromJson and outputs from previous jobs.

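    jobs:
      prepare:
        runs-on: ubuntu-latest
        outputs:
          services: ${{ steps.set.outputs.services }}
        steps:
          # services.json lives in the repo, so it must be checked out first
          - uses: actions/checkout@v4
          - id: set
            run: |
              # fromJson needs a JSON array, so emit compact JSON rather than a comma-joined string
              services=$(jq -c '.services' services.json)
              # ::set-output is deprecated; write to GITHUB_OUTPUT instead
              echo "services=$services" >> "$GITHUB_OUTPUT"
      deploy:
        needs: prepare
        strategy:
          matrix:
            service: ${{ fromJson(needs.prepare.outputs.services) }}
        runs-on: ubuntu-latest
        steps:
          - run: echo "Deploying ${{ matrix.service }}"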
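fromJson expects a JSON array such as ["api","web"] in the job output. When the service names come from a plain list rather than a JSON file, a small function can build that array. This is a sketch with a hypothetical name, to_json_array, and it assumes the names contain no quotes or backslashes.

```shell
# to_json_array <item...>: print the arguments as a compact JSON array of strings.
# Assumption: items contain no double quotes or backslashes (no escaping is done).
to_json_array() {
  local out="" item
  for item in "$@"; do
    # Prepend a comma only when out already holds an element.
    out="${out}${out:+,}\"${item}\""
  done
  printf '[%s]\n' "$out"
}
```

A prepare step could then run `echo "services=$(to_json_array api web worker)" >> "$GITHUB_OUTPUT"`, where the three service names are placeholders.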

10.2 Conditional Job Execution

Use expressions in if to skip jobs based on variables or files changed.

    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
      publish-docs:
        needs: build
        runs-on: ubuntu-latest
        if: contains(join(github.event.head_commit.modified, ''), 'docs/')
        steps:
          - run: echo "Publishing docs..."

10.3 Using Anchors for Large Configs

Define a reusable step block and merge with <<: *.

    defaults: &defaults
      runs-on: ubuntu-latest
      steps:
        - uses: actions/checkout@v4
    jobs:
      job1:
        <<: *defaults
        steps:
          - run: echo "Job1"
      job2:
        <<: *defaults
        steps:
          - run: echo "Job2"

***

11. Bonus Tips & Tricks

- Workflow Visualization: View DAG graph under “Actions” to understand job dependencies.

- Reusable Workflows: Break common tasks into workflow_call workflows.

- Self-hosted Runners: Tag runners and use runs-on: [self-hosted, linux] for dedicated infrastructure.

- Security Scanning: Integrate trivy or anchore as pre-deploy steps.

- Notifications: Send Slack/Teams alerts via webhook actions on failure or on threshold metrics.


12. Conclusion & Action Plan Recap

Congratulations on building a robust, scalable CI/CD pipeline with GitHub Actions! Here’s your action plan:

- Phase 1: Implement linting workflows with ESLint and Python linters; verify artifacts.

- Phase 2: Configure a test matrix for unit, integration, and E2E tests; upload coverage.

- Phase 3: Optimize builds with multi-stage Dockerfiles and BuildKit caching; manage artifacts.

- Phase 4: Automate deployments using blue/green, Helm, or kubectl; include rollbacks.

- Phase 5: Secure secrets via Vault, Parameter Store, and External Secrets Operator.

- Phase 6: Troubleshoot with verbose logs, tmate sessions, and real log analysis.

- Phase 7: Drive performance and cost efficiency: runner selection, matrix tuning, retention policies.

- Extended Sections: Develop custom GitHub Actions, leverage advanced YAML, and apply best practices.

13. Bonus Case Study: SaaS Multi-Tenant Deployment

Background: SaaS platform with per-tenant isolated environments, on-demand deployments for 100+ tenants.

Challenges

- Provision dedicated namespaces per tenant

- Automate isolated deployments

- Manage secrets and resource quotas per namespace

Solution Outline

- Define a tenant list in JSON in the repo (tenants.json).

- Generate dynamic matrix jobs to loop through tenants.

- Use Helm with --create-namespace and --namespace flags.

- Inject tenant-specific secrets via Kubernetes External Secrets.

- Monitor per-namespace resource usage with Prometheus.

Sample Workflow Snippet

    jobs:
      deploy-tenants:
        runs-on: ubuntu-latest
        needs: prepare
        strategy:
          matrix:
            tenant: ${{ fromJson(needs.prepare.outputs.tenants) }}
        steps:
          - uses: actions/checkout@v4
          - name: Deploy tenant
            run: |
              helm upgrade --install myapp-${{ matrix.tenant }} charts/myapp \
                --namespace tenant-${{ matrix.tenant }} \
                --create-namespace \
                --set image.tag=${{ github.sha }}

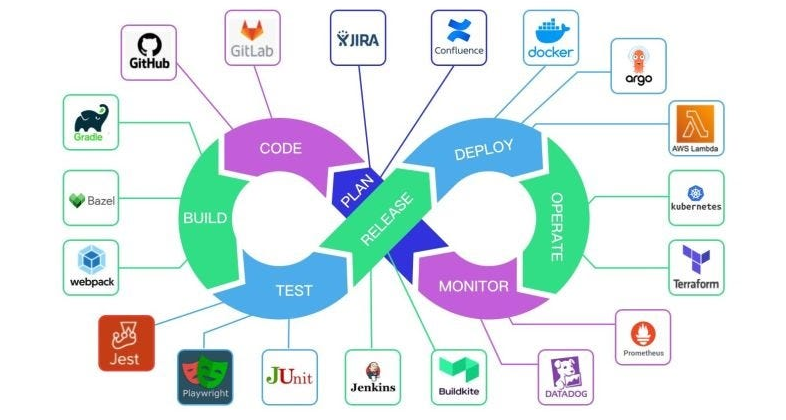

14. Architecture Diagrams

Embed these diagrams in your blog for visual clarity:

- CI/CD Workflow Diagram: Show each GitHub Actions phase and artifact flow.

- Blue/Green Deployment: Illustrate traffic switch between environments.

- SaaS Multi-Tenant: Depict per-tenant namespaces and dynamic matrices.